The Unofficial KubeCon EU '26 SRE Track

6 talks to add to your schedule

SRECon EMEA lands in Dublin this October 7–9, and it’s shaping up to be the most AI-heavy SRECon yet. Earlier this year, the Americas edition barely scratched the surface with four AI-related talks. In Dublin, that number jumps to 16 sessions, all probing the uneasy but inevitable relationship between AI and reliability.

This expansion mirrors a broader industry reality: AI adoption is accelerating, and the reliability questions are multiplying just as quickly. How do you monitor systems powered by opaque models? How do you scale infrastructure when workloads shift unpredictably? SREs are being asked to answer these questions while keeping the lights on.

Of course, you can’t catch everything: SRECon’s agenda is packed. But if you want a shortcut to the sessions most likely to spark ideas (or debates) long after Dublin, these are the ones we think are worth circling in your schedule.

Sylvain Kalache (Rootly) & Maria Vechtomova (Marveloud MLOps)

Tuesday, 7 October - 11:00

The duo explore how the SDLC (continuous integration, deployments, monitoring, scaling) is being reshaped by ML/LLMs. They also dive into whether the hype lives up to real-world pain points. What’s new? What’s unchanged? What feels like marketing spin? Great for platform engineers and SREs who need to figure out where to double down on tooling.

Derek Chamorro (Together AI)

Tuesday, 7 October - 11:50

This talk zeroes in on the darker side of AI reliability: prompt injection, adversarial inputs, and model poisoning. Derek Chamorro shares how his team is tackling these risks with layered defenses like input filtering, sandboxing, randomness, and federated learning, and how those practices tie directly into incident response and zero-trust architectures. It’s a rare mix of security and SRE that delivers practical techniques you can actually apply.

Sylvain Kalache (Rootly)

Wednesday, 8 October - 13:50

AI-assisted coding is fast gaining traction, but there’s a flip side: tests that echo bad logic, dependencies you didn’t pick, hallucinated code, and more churn. Sylvain takes you through the fallout: more frequent incidents, harder debugging, and fuzzier accountability. If your org is embracing Copilot, LLM code-assist, or even internal generation of scaffolding/code templates, this talk helps you see where the potholes are. It’s also useful for defining guardrails so that speed doesn’t eat reliability.

Lerna Ekmekcioglu (Clockwork.io)

Wednesday, 8 October - 14:40

Think of this as the performance-under-pressure talk: what happens when AI workloads meet imperfect networks, unstable infrastructure, and intermittent failures. Lerna digs into how delays, drops, or “glitches” in the network fabric (NIC/link flaps, etc.) can undermine everything. If your stack runs big-model training, large data movement, or anything that can’t just “retry later,” this is one to watch closely.

Jorge Lainfiesta (Rootly) & Leena Mooneeram (Chainalysis)

Wednesday, 8 October - 17:10

This is for when you’re grappling with the age-old tension: feature teams that just want to ship vs central SRE teams trying to enforce reliability. The hybrid “Platform SRE” model they propose offers a path: self-service reliability via platform tooling plus embedding temporarily in feature teams for high-leverage reliability work. This talk gives concrete options (and pitfalls) for scaling reliability without bottlenecks.

Bholanathsingh Surajbali & Nicholas McKenzie (Mauritius Commercial Bank)

Thursday, 9 October - 13:50

In banking, AI systems are no longer in the lab; they’re making decisions in credit, fraud, risk, and everything in between. Surajbali & McKenzie dig into “AI observability”: going beyond metrics, logs, and traces to include the audit trails, explainability, and data monitoring that really matter in high-stakes regulated environments. They walk through a real-world case study where AI observability didn’t just catch noisy alerts, but uncovered a silent, critical issue and helped restore trust.

After a full day of talks, counter-intuitive failures, and AI-driven reliability insights, we’ve got just the thing to reset: the Happy Hour w/ Rootly AI, Chronosphere & Cloudsmith. Whether you want to swap war stories from the incidents that caught you off guard, crowdsource best practices from the sessions you loved, or just kick back with a drink in hand and let the summit energy settle in, this is the place.

Be sure to register: the exact location comes with approval. (Bring your curiosity; leave your slide deck behind… at least for a few hours.)

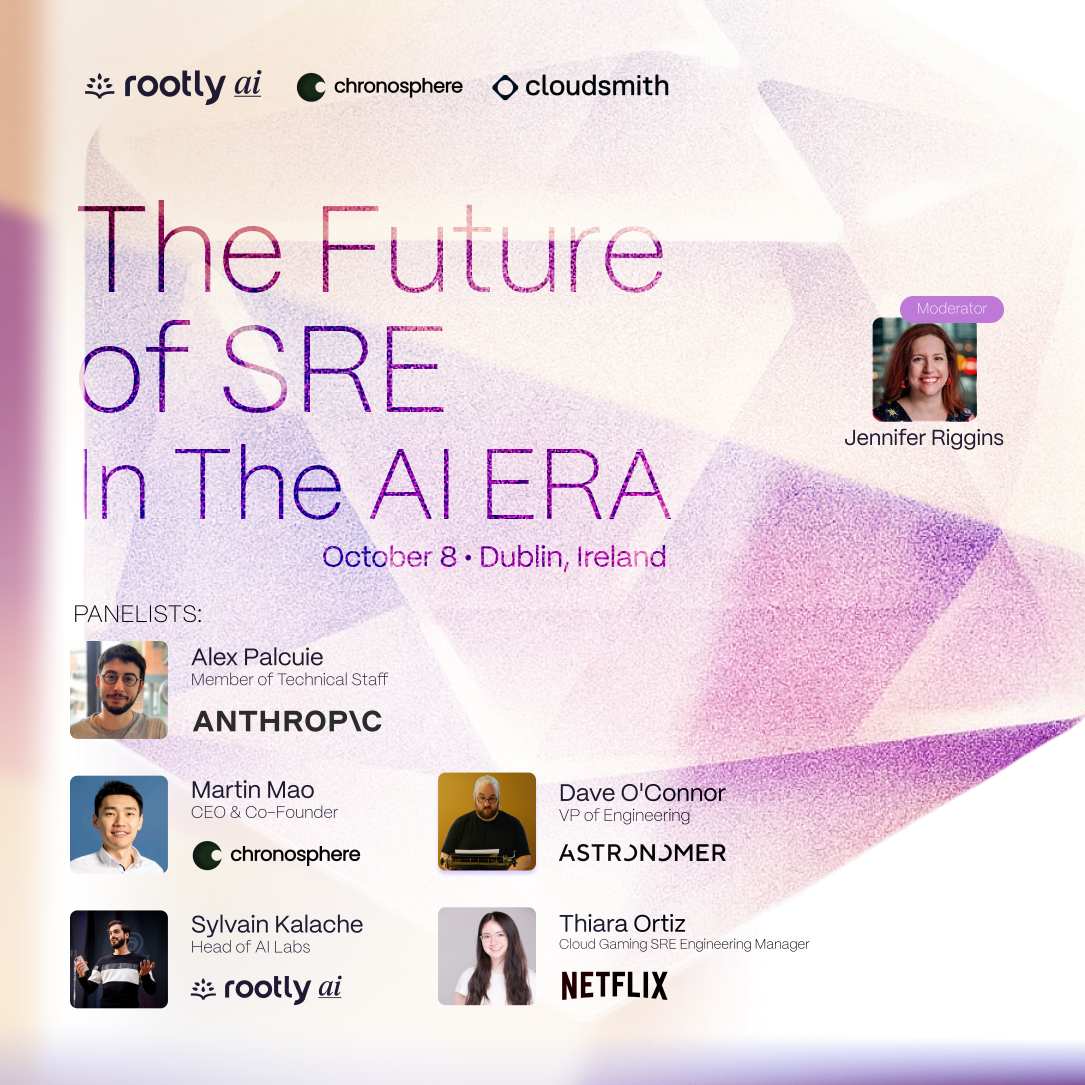

Day 2 wraps with a heavy hitter: The Future of SRE in the AI Era, a panel hosted by Rootly featuring voices from Anthropic, Netflix, Astronomer, Chronosphere, and Cloudsmith.

Think of it as the moment to zoom out and ask the big questions: How is AI redefining what it means to be “reliable”? Where do humans stay essential in alerting, remediation, and decisions? What ethical trade-offs are showing up now that AI is baked into observability and incident response?

Moderated by Jennifer Riggins, this session isn’t just about hearing stories. It’s the chance to grill experts, compare emerging norms, and come away with new lenses for tackling your own SRE challenges. Then stick around for the networking reception afterward: that’s where the real insights often bubble up over conversation and a drink.

SRECon EMEA is packed, but the real trick is knowing where to lean in. Whether you’re chasing AI reliability lessons in the sessions or trading notes over a pint after hours, the goal’s the same: walk away with ideas you can actually put to work.