
Anthropic’s latest incident shows the industry that a new category of challenges is coming for SREs. As systems become more sophisticated, failure is harder to detect and even to define.

Because, if you think about it, did Anthropic have a general outage? Were users experiencing latency? Was there a flaky third-party dependency?

No, no, and no.

The issue was that Claude, Anthropic’s flagship model family, was “dumber”.

But that’s not something the monitoring tools on the market help you detect.

Around August, the Anthropic team started noticing more and more complaints on Reddit, X, and other social media platforms. Conspiracy theories emerged. Frustrated users accused the company of deliberately degrading its models in order to charge higher rates for future releases.

The Anthropic team was perplexed. They would never purposefully degrade a model’s performance; so much work goes into each release. Something had to be going on, even though all the dashboards showed green lights.

But how do you assess that a response is dumber than before? Perhaps the user is not providing the right context, or the task at hand is not equivalent to a previous one and needs a refined prompt.

And then there’s the matter of scope. Let’s assume there is an issue you haven’t detected and can’t reproduce. How do you find out if the reported degradation is general? Or if it only impacts a specific cluster of users or use cases?

To make matters more difficult, your privacy policies do not allow you to see the content of the queries or the answers that you give to your users. You’re flying blind.

When Anthropic published its retrospective, I immediately went through it.

Turns out Anthropic’s Claude ran in a degraded state for about a month. You may ask yourself, how is that possible? Claude has a 32% market share in enterprise adoption, beating OpenAI, which is used in only 25% of enterprise deployments.

Todd Underwood, Anthropic’s Head of Reliability, wrote a comprehensive incident postmortem of what had happened and why. It’s not an easy thing to do, considering that to explain the situation, you have to lay out the basics of how LLMs are developed, tested, and deployed for an audience that may not be familiar with the matter.

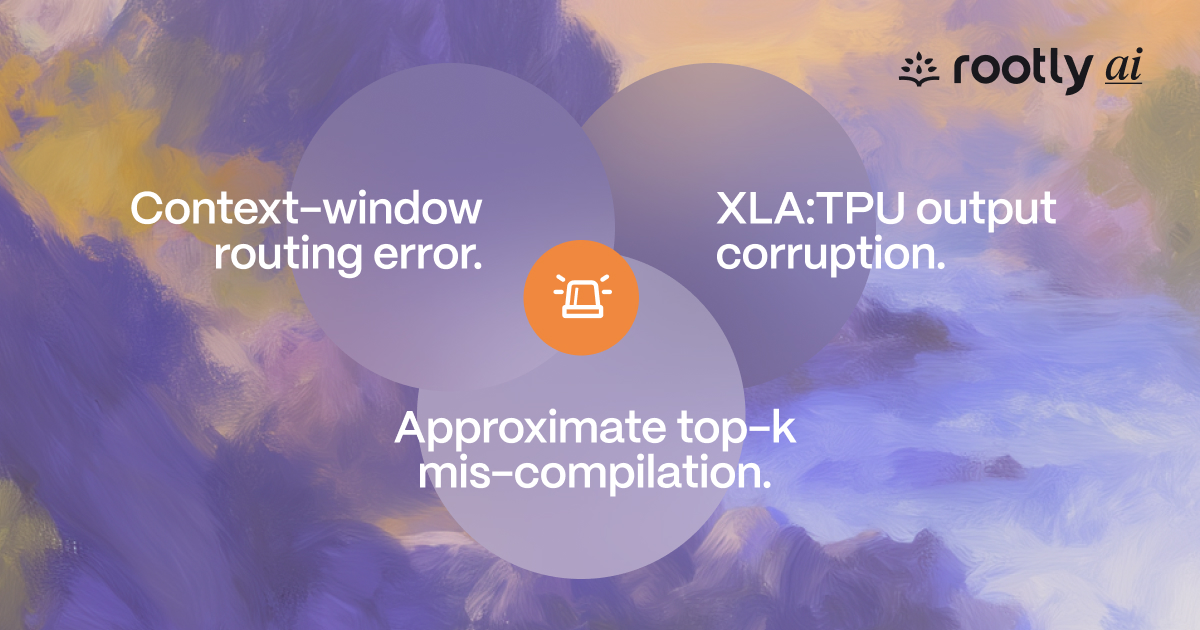

Between August and early September, Anthropic traced three overlapping issues that degraded model performance for a subset of users.

The problems weren’t outages or capacity failures; they were quiet degradations that together impacted user experience but persisted undetected in production.

At Rootly, we see this pattern often across prospective customers and people we talk to in the community: large, distributed systems where everything looks healthy until users start noticing something feels off. Anthropic’s retrospective shows a clear example of how reliability challenges are evolving in the age of AI.

None of these bugs involved model weights or data. They lived in the infrastructure stack: routing, serving, and hardware, which made them especially hard to detect.

Claude is used by 19 million users each month. Claude has three distinct models, each with various editions running in production. LLMs are complex, living systems that fail differently from more deterministic systems. In the AI world, 200 OK responses do not necessarily mean everything is OK.

Additionally, Anthropic operates across multiple environments (AWS, GCP) and hardware platforms (TPU, GPU, Trainium).

In that setup, it’s entirely possible for one region or backend to degrade while others remain fine.
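One way to surface a single degraded backend is to tag every synthetic quality check with the backend that served it and compare pass rates across the fleet. The sketch below is illustrative only: the function name `flag_degraded_backends`, the backend labels, and the thresholds are assumptions for this post, not anything described in Anthropic’s retrospective.

```python
from collections import defaultdict
from statistics import median

def flag_degraded_backends(results, min_samples=20, max_gap=0.10):
    """Return backends whose pass rate trails the fleet median by more than max_gap.

    results: iterable of (backend, passed) pairs, where `passed` is the outcome
    of a synthetic quality check routed to that backend (hypothetical input shape).
    """
    totals = defaultdict(lambda: [0, 0])  # backend -> [passed count, total count]
    for backend, passed in results:
        totals[backend][0] += int(passed)
        totals[backend][1] += 1

    # Ignore backends with too few samples to say anything meaningful.
    rates = {b: p / t for b, (p, t) in totals.items() if t >= min_samples}
    if len(rates) < 2:
        return {}

    fleet = median(rates.values())
    return {b: r for b, r in rates.items() if fleet - r > max_gap}

# Illustrative data: three backends, one of which is quietly worse.
sample = (
    [("tpu", True)] * 95 + [("tpu", False)] * 5
    + [("gpu", True)] * 96 + [("gpu", False)] * 4
    + [("trainium", True)] * 70 + [("trainium", False)] * 30
)
print(flag_degraded_backends(sample))
```

Comparing against the fleet median rather than a fixed target keeps the check useful even when the overall pass rate shifts after a model release, though it will miss a degradation that hits every backend at once.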

One of the most common reliability failure modes we see when we begin discussions with customers facing similar challenges: by the time engineers can quantify the issue statistically, it has often been in production for weeks.

What Anthropic encountered mirrors what we hear every week during discussions with the broader engineering community, particularly those running AI inference, ML pipelines, or high-scale SaaS infrastructure.

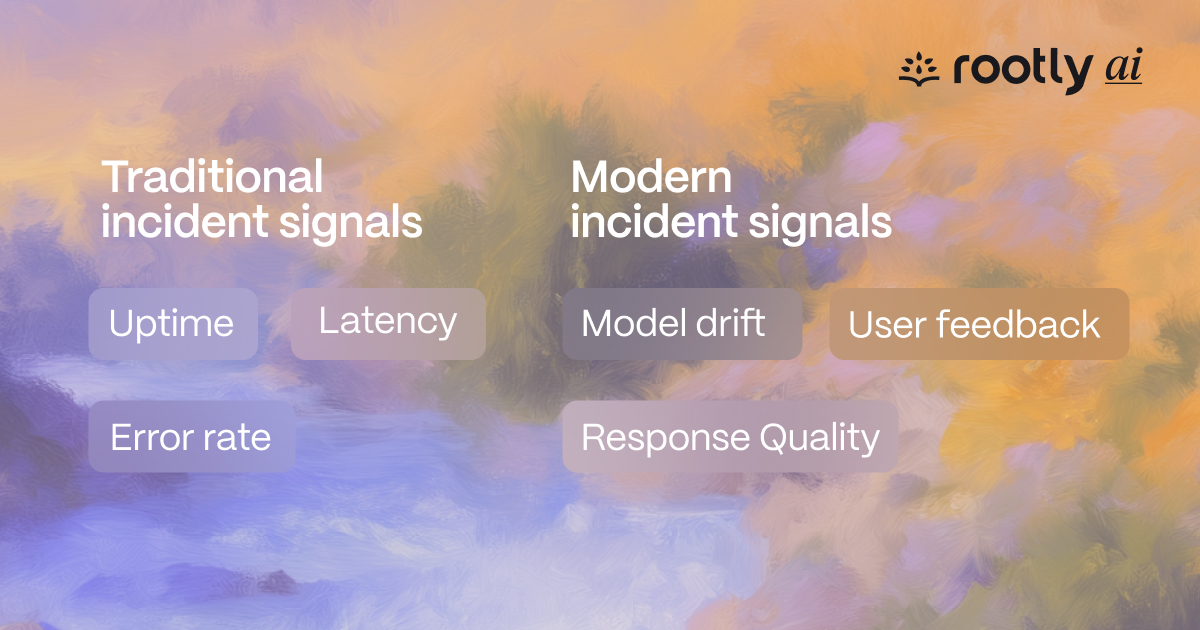

Availability metrics can stay green while model quality quietly declines.

Monitoring for semantic correctness, not just uptime, is becoming a new reliability requirement.
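A minimal sketch of what semantic monitoring can look like: a small set of synthetic prompts with mechanically checkable answers, run on a schedule against production. Because the prompts are synthetic, no user content needs to be inspected. Everything here is hypothetical (`run_canaries`, `ask_model`, and the prompts themselves); `ask_model` stands in for whatever client your serving stack exposes.

```python
from typing import Callable, Iterable, List, Tuple

# A canary is a prompt plus a function that decides whether the answer is acceptable.
Canary = Tuple[str, Callable[[str], bool]]

def run_canaries(
    ask_model: Callable[[str], str],
    canaries: Iterable[Canary],
) -> Tuple[float, List[str]]:
    """Return (pass_rate, failed_prompts) for a set of synthetic checks.

    A call that succeeds at the transport level but returns a wrong answer
    counts as a failure, which is the case uptime checks cannot see.
    """
    failures: List[str] = []
    total = 0
    for prompt, check in canaries:
        total += 1
        try:
            ok = bool(check(ask_model(prompt)))
        except Exception:
            ok = False  # errors and timeouts are failures too
        if not ok:
            failures.append(prompt)
    pass_rate = (total - len(failures)) / total if total else 0.0
    return pass_rate, failures

# Illustrative canaries with answers that can be verified without a human.
CANARIES: List[Canary] = [
    ("What is 17 * 3? Reply with the number only.", lambda a: a.strip() == "51"),
    ("Spell 'reliability' backwards.", lambda a: "ytilibailer" in a.lower()),
]
```

Emitting the pass rate as a metric next to latency and error rate puts answer quality on the same dashboard as availability.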

These incidents cut across model-serving, compiler, and infra teams. The faster organizations can establish ownership, the faster they recover.

We consistently see across Rootly customers that cross-functional clarity is the strongest predictor of MTTR.

Small changes, like routing rules, compiler flags, or load balancer updates, can meaningfully change model behavior.

Reliability engineering now needs to include model-output validation as part of deployment testing.
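As a rough illustration, a deployment gate can replay a golden set of prompts against both the current baseline and the candidate build, and block the rollout if the candidate fails cases the baseline passes. The names `GoldenCase` and `deployment_gate` are invented for this sketch, and `baseline` and `candidate` are placeholders for calls into two serving configurations.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

@dataclass(frozen=True)
class GoldenCase:
    name: str
    prompt: str
    check: Callable[[str], bool]  # True if the output is acceptable

def deployment_gate(
    golden_set: Sequence[GoldenCase],
    baseline: Callable[[str], str],
    candidate: Callable[[str], str],
    max_regressions: int = 0,
) -> Tuple[bool, List[str]]:
    """Return (allowed, regressed_case_names).

    A regression is a case the baseline passes and the candidate fails.
    Cases that fail on both are not counted, since the change under test
    did not cause them.
    """
    regressions: List[str] = []
    for case in golden_set:
        baseline_ok = case.check(baseline(case.prompt))
        candidate_ok = case.check(candidate(case.prompt))
        if baseline_ok and not candidate_ok:
            regressions.append(case.name)
    return len(regressions) <= max_regressions, regressions

# Illustrative golden set; real ones would cover the behaviors users rely on.
GOLDEN = [
    GoldenCase("capital", "Capital of France? One word.", lambda a: a.strip().lower() == "paris"),
    GoldenCase("arithmetic", "What is 12 + 30? Number only.", lambda a: a.strip() == "42"),
]
```

Running the same gate for infrastructure-only changes (a routing rule, a compiler flag) is the point: the model weights are identical, so any regression comes from the serving path.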

Internal regression tests rarely capture live-traffic drift. Real-world feedback (user signals, quality scoring, anomaly detection) fills that gap.
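For the user-signal side, even a simple trailing-window z-score over a content-free metric, such as the daily share of responses that receive negative feedback, can raise a flag before anyone has a reproducible bug. This is a sketch under assumed inputs: `feedback_anomalies`, the window size, and the threshold are illustrative choices, and the sample numbers are made up.

```python
from statistics import mean, stdev
from typing import List, Sequence, Tuple

def feedback_anomalies(
    daily_rates: Sequence[float],
    window: int = 7,
    threshold: float = 3.0,
    min_sigma: float = 1e-3,
) -> List[Tuple[int, float]]:
    """Flag days whose negative-feedback rate is far above the trailing window.

    Returns (day_index, z_score) pairs. min_sigma guards against a perfectly
    flat history, which would otherwise cause a division by zero.
    """
    alerts: List[Tuple[int, float]] = []
    for i in range(window, len(daily_rates)):
        history = daily_rates[i - window:i]
        mu = mean(history)
        sigma = max(stdev(history), min_sigma)
        z = (daily_rates[i] - mu) / sigma
        if z > threshold:
            alerts.append((i, round(z, 2)))
    return alerts

# Made-up data: a stable ~2% negative-feedback rate, then a jump on the last day.
rates = [0.020, 0.022, 0.019, 0.021, 0.020, 0.023, 0.021, 0.022, 0.045]
print(feedback_anomalies(rates))
```

A trailing window adapts to slow drift, which also means a gradual degradation can absorb itself into the baseline; pairing this with a fixed reference period is one way to compensate.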

Anthropic rolled back a performance optimization because it reduced quality. Mature teams are comfortable trading speed for correctness when the user experience depends on it.

Looking at patterns across Rootly’s customer base, a few themes are becoming universal for reliability teams:

Anthropic’s retrospective is more than an isolated story; it’s a snapshot of how reliability challenges are changing. Failures are no longer just about downtime; they’re about subtle degradation, cross-team complexity, and rapid learning.

Across Rootly’s customer base, we see the same shift. Reliability is expanding beyond incident response to include data correctness, model behavior, and cross-system visibility.

As AI infrastructure becomes more complex, the organizations that succeed will be those that treat reliability not as reactive chaos, but as an ongoing process of observation, correlation, and learning. That is exactly the mindset reflected in Anthropic’s transparency.