Incidents unfold quickly and rarely follow a predictable path. In the moment, engineers are triaging alerts, switching between dashboards, escalating across teams, and testing theories under pressure. Afterward, one question always surfaces: What exactly happened, and when?

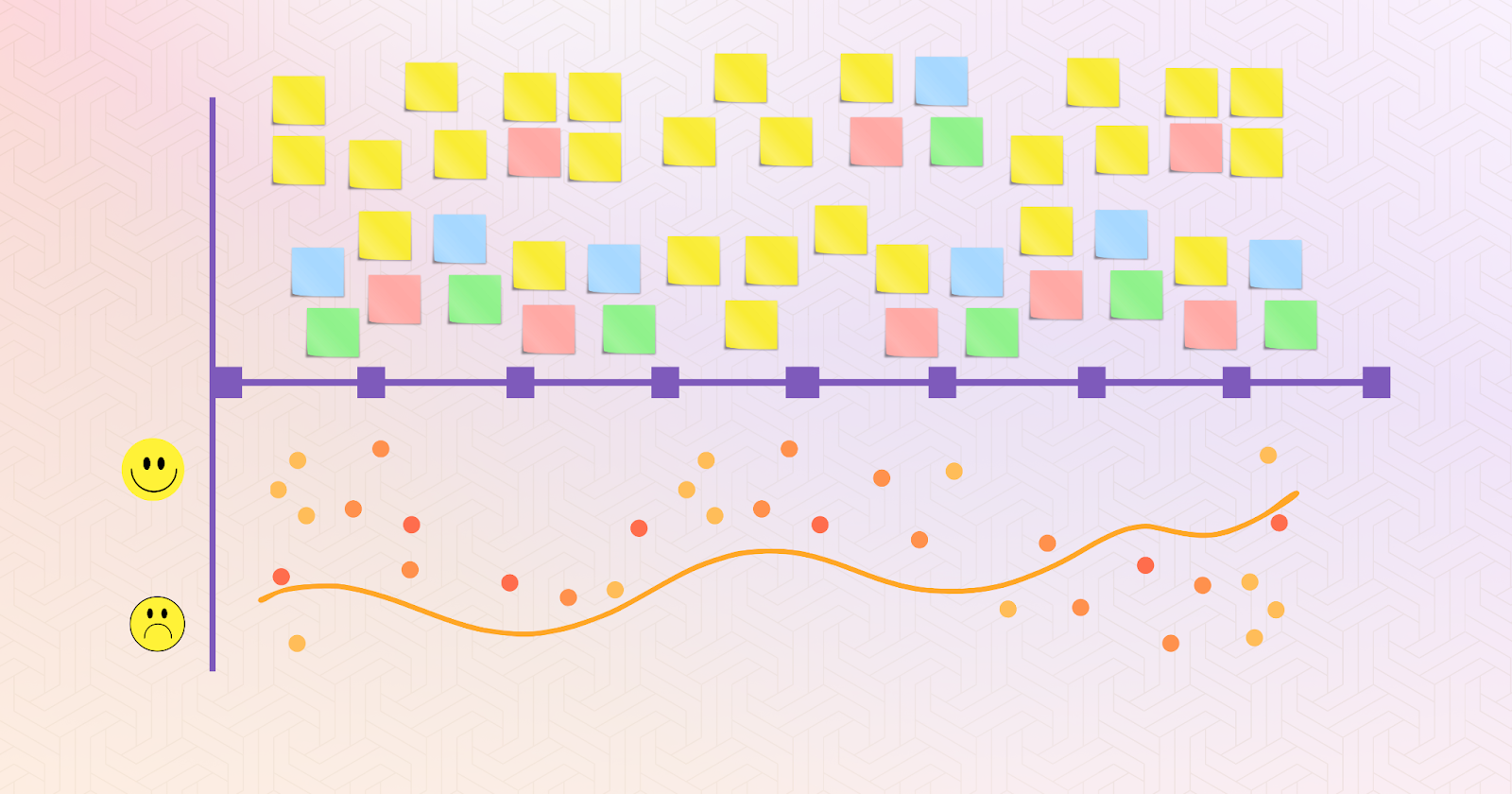

Timeline construction brings order to that chaos. It provides the structure needed to understand an incident clearly, without relying on memory or assumptions. A well-built timeline anchors the postmortem in facts, limits hindsight bias, and brings precision to how teams examine system behavior, human response, and communication flow.

Strong timelines turn reactive cleanup into forward-looking insight. They reveal gaps in detection and escalation, highlight delays in decision-making, and expose patterns that written summaries often miss. They are essential for meaningful retrospectives, measurable reliability metrics, and continuous improvement across teams.

If you're serious about reducing MTTR, learning from past incidents, and improving coordination under pressure, start with the timeline. It is not just a record. It is the foundation of every high-functioning incident response process.

What Is an Incident Timeline (and Who Needs It)?

An incident timeline is a structured, chronological record of all key events surrounding an incident—starting from the earliest indicators (like degraded performance or failed health checks), through internal escalations and mitigation steps, to final resolution and recovery. It typically includes:

- Alerts: When and how the first signals surfaced (e.g., monitoring tools, user reports)

- Actions: What responders did and when—queries, rollbacks, restarts, scaling, etc.

- Communications: Who said what and where (Slack, PagerDuty, status pages)

- Changes: Code deploys, infra tweaks, config updates that preceded or followed the issue

- Recoveries: When full service was restored, and what validated that recovery
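To make those categories concrete, here is a minimal sketch of how timeline entries might be modeled in code. It assumes a simple in-memory representation, and the names `EntryKind`, `TimelineEntry`, and `build_timeline` are hypothetical, not taken from any particular tool.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class EntryKind(Enum):
    """The five categories of timeline entries listed above."""
    ALERT = "alert"
    ACTION = "action"
    COMMUNICATION = "communication"
    CHANGE = "change"
    RECOVERY = "recovery"


@dataclass(frozen=True)
class TimelineEntry:
    at: datetime     # timezone-aware timestamp of the event
    kind: EntryKind  # which category the event belongs to
    source: str      # where it came from, e.g. "monitoring" or "slack"
    summary: str     # short human-readable description


def build_timeline(entries: list[TimelineEntry]) -> list[TimelineEntry]:
    """Return entries in chronological order (the sort is stable, so
    events with identical timestamps keep their original order)."""
    return sorted(entries, key=lambda e: e.at)


def utc(hour: int, minute: int) -> datetime:
    # Hypothetical helper: a fixed date keeps the example reproducible.
    return datetime(2024, 5, 1, hour, minute, tzinfo=timezone.utc)


timeline = build_timeline([
    TimelineEntry(utc(10, 20), EntryKind.RECOVERY, "monitoring", "Error rate back to baseline"),
    TimelineEntry(utc(9, 40), EntryKind.CHANGE, "deploy", "Config update shipped"),
    TimelineEntry(utc(9, 52), EntryKind.ALERT, "monitoring", "Health checks failing"),
    TimelineEntry(utc(10, 5), EntryKind.ACTION, "slack", "Rolled back config update"),
])

for entry in timeline:
    print(entry.at.strftime("%H:%M"), entry.kind.value, entry.summary)
```

Sorting by timestamp alone is the simplest ordering rule; a real system would also need to decide how to handle clock skew between sources.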

The timeline is used by multiple stakeholders at different phases:

- Incident responders rely on it in real-time to stay coordinated and avoid duplicated effort.

- Postmortem reviewers analyze it after the fact to understand what unfolded and why.

- Engineering leadership uses it to evaluate response efficiency, accountability, and systemic reliability.

Without this anchor, incident response becomes a game of conflicting memories and scattered data points. With it, teams gain alignment, clarity, and the ability to learn and improve.

Why Accurate Timelines Are Essential

The human brain is a notoriously unreliable event recorder—especially during high-stress, time-sensitive incidents. Relying on memory-based reconstruction after the fact introduces serious risks:

- Sequence confusion: Teams often misremember the order of actions or what triggered what.

- Timeline gaps: Long, unaccounted-for periods skew perceptions of responsiveness.

- False correlation: It's easy to attribute cause to an event that happened after the real root issue began.

These inaccuracies cascade into flawed root cause analysis (RCA) and poorly scoped action items. For example, you might believe the first alert came at 10:03am, but it actually fired at 9:52am and was missed. That 11-minute gap could be the key to reducing MTTA (Mean Time to Acknowledge)—but you won’t fix what you never measured.

Worse, when timelines are wrong, retrospectives shift from blameless postmortems to finger-pointing or vague platitudes. Learning gets replaced by storytelling—and not the useful kind.
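The 10:03am vs. 9:52am example can be checked with simple arithmetic. The sketch below compares time to acknowledge measured from the remembered alert with time to acknowledge measured from the earliest recorded alert. The 10:04am acknowledgment time is an assumption added for illustration.

```python
from datetime import datetime

# Hypothetical recorded alert times for the scenario above (same day).
recorded_alerts = [
    datetime(2024, 5, 1, 10, 3),  # the alert responders remember
    datetime(2024, 5, 1, 9, 52),  # the earlier alert that fired but was missed
]
acknowledged_at = datetime(2024, 5, 1, 10, 4)  # assumed acknowledgment time

remembered_first_alert = datetime(2024, 5, 1, 10, 3)
actual_first_alert = min(recorded_alerts)  # earliest alert in the record


def minutes(delta):
    """Convert a timedelta to minutes."""
    return delta.total_seconds() / 60


missed_gap = minutes(remembered_first_alert - actual_first_alert)
tta_from_memory = minutes(acknowledged_at - remembered_first_alert)
tta_from_record = minutes(acknowledged_at - actual_first_alert)

print(f"Missed window: {missed_gap:.0f} min")                      # 11 min
print(f"Time to acknowledge (memory): {tta_from_memory:.0f} min")  # 1 min
print(f"Time to acknowledge (record): {tta_from_record:.0f} min")  # 12 min
```

Measured from memory, the response looks nearly instant; measured from the record, it took twelve times as long.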

Real-Time Visibility During an Incident

The value of a timeline isn’t limited to hindsight. During the incident, a shared, live-updating timeline acts as the connective tissue across functions and tools.

In a typical response scenario, people are scattered across:

- Slack threads

- PagerDuty war rooms

- Datadog or Grafana dashboards

- Google Docs

- Statuspage updates

Without a single source of temporal truth, the result is drift—both in understanding and action.

A unified timeline enables responders to ask better questions:

- “Has this alert already fired before?”

- “Did the rollback finish before the error rate dropped?”

- “Are we waiting on the DB team, or did they already restart the replica?”

This reduces confusion, shortens time to resolution, and ensures that leadership, customer support, and comms teams are all on the same page.
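One way to get a single source of temporal truth is to merge each tool's event stream by timestamp. The sketch below assumes each tool's export is already sorted and has been reduced to hypothetical `(timestamp, source, message)` tuples; real exports from Slack, PagerDuty, or Datadog would first need their own parsing. It uses the standard library's `heapq.merge` and then answers one of the questions above.

```python
import heapq
from datetime import datetime, timezone


def at(h, m):
    """Hypothetical helper: a UTC timestamp on a fixed date."""
    return datetime(2024, 5, 1, h, m, tzinfo=timezone.utc)


# Hypothetical per-tool exports, each already sorted by time.
pager_events = [
    (at(9, 52), "pager", "Alert triggered: checkout latency"),
    (at(10, 4), "pager", "Alert acknowledged"),
]
chat_events = [
    (at(10, 6), "chat", "Starting rollback of release"),
    (at(10, 15), "chat", "Rollback complete"),
]
metrics_events = [
    (at(9, 50), "metrics", "p99 latency above threshold"),
    (at(10, 18), "metrics", "Error rate back to baseline"),
]

# heapq.merge lazily combines already-sorted inputs into one sorted stream.
unified = list(heapq.merge(pager_events, chat_events, metrics_events,
                           key=lambda event: event[0]))

for ts, source, message in unified:
    print(ts.strftime("%H:%M"), f"[{source}]", message)

# "Did the rollback finish before the error rate dropped?"
rollback_done = next(ts for ts, _, msg in unified if msg == "Rollback complete")
recovered = next(ts for ts, src, msg in unified
                 if src == "metrics" and "baseline" in msg)
print("Rollback finished before recovery:", rollback_done < recovered)
```

Because `heapq.merge` only compares the heads of each stream, it scales to long incidents without re-sorting everything each time a new event arrives.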

Better Postmortems Start With Better Timelines

Every strong postmortem is grounded in a clear, neutral retelling of what happened when. That story begins with a timeline.

When timelines are constructed well, they:

- Enable blameless analysis: Focus on sequences and systems, not individual judgment.

- Reveal patterns: Like recurring delays in escalation, noisy alerts that get ignored, or poor handoffs.

- Support continuous improvement: Action items become specific, measurable, and rooted in real data—not opinion.

For example, seeing that five separate teams engaged before clear ownership was established helps justify the need for better incident command training or tooling—not just more alerts.
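Spotting that pattern becomes mechanical once the timeline records who joined and when ownership was assigned. A minimal sketch, assuming hypothetical `joined` and `owner_assigned` event types and made-up team names:

```python
from datetime import datetime

# Hypothetical timeline rows: (time, team, event_type).
# "owner_assigned" marks the moment clear ownership was established.
events = [
    (datetime(2024, 5, 1, 9, 55), "platform", "joined"),
    (datetime(2024, 5, 1, 9, 58), "database", "joined"),
    (datetime(2024, 5, 1, 10, 1), "networking", "joined"),
    (datetime(2024, 5, 1, 10, 3), "payments", "joined"),
    (datetime(2024, 5, 1, 10, 6), "support", "joined"),
    (datetime(2024, 5, 1, 10, 12), "platform", "owner_assigned"),
    (datetime(2024, 5, 1, 10, 14), "security", "joined"),
]


def teams_before_ownership(rows):
    """Return the distinct teams that joined before the first ownership event."""
    teams = set()
    for _, team, kind in sorted(rows):  # chronological order
        if kind == "owner_assigned":
            return teams
        if kind == "joined":
            teams.add(team)
    return teams  # ownership was never assigned


engaged = teams_before_ownership(events)
print(len(engaged), sorted(engaged))
```

Run across many incidents, a count like this turns an anecdote into a trend worth acting on.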

Over time, maintaining consistent, high-fidelity timelines helps organizations compare incidents and detect systemic issues—not just one-off mistakes.

Connecting Timelines to Key Metrics

Timeline data is what unlocks your incident KPIs. Without it, metrics like MTTR (Mean Time to Resolve) or TTD (Time to Detect) become imprecise or misleading.

Key metrics supported by structured timelines include:

- TTD (Time to Detect): How long did the issue exist before the team knew?

- MTTA (Mean Time to Acknowledge): How quickly, on average, did a responder acknowledge the issue after detection?

- MTTR (Mean Time to Resolve): Average time from detection to confirmed resolution.

- TTC (Time to Communicate): When was the first customer-facing update issued?

These indicators help engineering leaders assess not only system performance but response effectiveness. By measuring these consistently, you can validate the impact of reliability investments—whether that’s better alert tuning, runbook improvements, or response automation.
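As a sketch of how these metrics fall out of timeline data, the code below computes per-incident durations and then averages them, which is where the "mean" in MTTA and MTTR comes from. The field names and sample timestamps are hypothetical. Measuring TTC from detection is also an assumption; some teams measure it from impact start instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class IncidentTimes:
    """Key timestamps pulled from one incident's timeline (hypothetical fields)."""
    impact_start: datetime           # earliest evidence the issue existed
    detected: datetime               # first alert or report
    acknowledged: datetime           # a responder acknowledged it
    first_customer_update: datetime  # first customer-facing update
    resolved: datetime               # resolution confirmed


def minutes(delta: timedelta) -> float:
    return delta.total_seconds() / 60


def incident_metrics(inc: IncidentTimes) -> dict[str, float]:
    # Per-incident durations in minutes, following the definitions above.
    return {
        "ttd": minutes(inc.detected - inc.impact_start),
        "tta": minutes(inc.acknowledged - inc.detected),
        "ttr": minutes(inc.resolved - inc.detected),
        "ttc": minutes(inc.first_customer_update - inc.detected),  # assumed start point
    }


def mean_metrics(incidents: list[IncidentTimes]) -> dict[str, float]:
    # Average each duration across incidents.
    per_incident = [incident_metrics(i) for i in incidents]
    return {f"mean_{k}": mean(m[k] for m in per_incident) for k in per_incident[0]}


def t(h, m):
    return datetime(2024, 5, 1, h, m)


incidents = [
    IncidentTimes(t(9, 45), t(9, 52), t(10, 4), t(10, 10), t(10, 40)),
    IncidentTimes(t(14, 0), t(14, 2), t(14, 6), t(14, 20), t(15, 2)),
]
print(mean_metrics(incidents))
```

The calculation is trivial; the hard part is trusting the timestamps, which is why the timeline comes first.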

Chat Logs vs. Structured Timelines

Some teams assume that saving the Slack thread is enough. After all, ChatOps captures everything... right?

Not quite.

Chat logs are unstructured. They contain:

- Back-and-forth messages

- Tangents, typos, theories

- Redundant questions

- Unclear timestamps (especially in long incidents)

What’s missing:

- Context: Was that restart manual or automated? Was that rollback successful?

- Intent: Was the action exploratory or corrective?

- Structure: When did the incident actually start? Who took lead?

This is where structured timeline tools shine. Purpose-built platforms like ours at Rootly go beyond basic logs, delivering structured, enriched timelines designed for clarity, context, and real-time collaboration. Typical features include:

- Categorized entries (alerts, actions, comms)

- Timestamps with source metadata

- Fields for responder annotations or links to logs/runbooks

- Exportable data for dashboards or audits
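To show what those features buy you, here is a minimal sketch of structured entries with categories, source metadata, and annotations, exported both as JSON (full fidelity, for audits) and as flattened CSV (for spreadsheets or dashboards). The field names, channel name, and runbook URL are hypothetical, and this is not Rootly's export format.

```python
import csv
import io
import json

# Hypothetical structured entries; field names are illustrative only.
entries = [
    {
        "at": "2024-05-01T09:52:00Z",
        "category": "alert",
        "source": {"tool": "monitoring", "ref": "checkout-latency"},
        "text": "p99 latency above threshold",
        "annotation": "",
        "links": [],
    },
    {
        "at": "2024-05-01T10:06:00Z",
        "category": "action",
        "source": {"tool": "chat", "ref": "#inc-checkout"},
        "text": "Rolled back release",
        "annotation": "Corrective, not exploratory",
        "links": ["https://runbooks.example.com/rollback"],
    },
]


def to_json(rows):
    """Full-fidelity export, e.g. for an audit archive."""
    return json.dumps(rows, indent=2)


def to_csv(rows):
    """Flattened export: nested source metadata becomes separate columns."""
    buffer = io.StringIO()
    fieldnames = ["at", "category", "tool", "ref", "text", "annotation", "links"]
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    for row in rows:
        writer.writerow({
            "at": row["at"],
            "category": row["category"],
            "tool": row["source"]["tool"],
            "ref": row["source"]["ref"],
            "text": row["text"],
            "annotation": row["annotation"],
            "links": " ".join(row["links"]),
        })
    return buffer.getvalue()


# Filtering by category is a one-liner once entries are structured.
actions = [e for e in entries if e["category"] == "action"]
print(to_csv(actions))
```

The annotation field captures the intent that raw chat logs leave out ("corrective, not exploratory").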

The goal isn’t to replace chat—it’s to extract signal from noise and build a durable narrative your team can learn from.

How to Build a Reliable Incident Timeline

Creating accurate timelines shouldn’t be a burden—and it shouldn’t be entirely manual either.

Best practices for building incident timelines:

- Automate the obvious
  - Integrate with alerting, deployment, and monitoring tools to auto-ingest events.
  - Example: “PagerDuty alert triggered” or “Datadog anomaly monitor triggered.”

- Enrich with context
  - Add metadata like severity, affected services, team ownership, and time zone.

- Annotate intentionally
  - Human responders should layer in decisions, observations, and uncertainties (“suspected memory leak in node X”).

- Use specialized tools
  - Tools like Rootly auto-generate timelines with categorization, filtering, and audit logs.
  - incident.io integrates directly into Slack, making annotation and real-time updates seamless.

- Review timelines in retros
  - Make timeline validation a first-class part of your postmortem and assign a role to own it. Preserve the timeline the way you would preserve log data: it is part of the postmortem artifact.
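The "automate" and "enrich" steps often come down to two chores: normalizing timestamps that tools emit with different UTC offsets, and attaching incident-level context once instead of retyping it. A minimal sketch, assuming hypothetical raw events that carry ISO 8601 timestamps with explicit offsets (real webhook payloads differ from tool to tool):

```python
from datetime import datetime, timezone

# Hypothetical raw events, each with its own UTC offset.
raw_events = [
    {"tool": "pager", "ts": "2024-05-01T05:52:00-04:00", "text": "Alert triggered"},
    {"tool": "deploy", "ts": "2024-05-01T11:40:00+02:00", "text": "Config update deployed"},
    {"tool": "chat", "ts": "2024-05-01T10:06:00+00:00", "text": "Rolling back config"},
]

# Hypothetical incident-level context, attached to every entry.
incident_context = {"severity": "SEV2", "service": "checkout", "owner": "payments-team"}


def normalize(event, context):
    """Convert the timestamp to UTC and attach incident-level metadata."""
    ts = datetime.fromisoformat(event["ts"]).astimezone(timezone.utc)
    return {**context, "tool": event["tool"], "at": ts, "text": event["text"]}


timeline = sorted((normalize(e, incident_context) for e in raw_events),
                  key=lambda e: e["at"])

for e in timeline:
    print(e["at"].isoformat(), e["tool"], e["severity"], e["text"])
```

Sorted by local wall-clock time, the deploy at 11:40 would appear last; in UTC it is the earliest event, landing twelve minutes before the alert. That is exactly the kind of ordering error that hides a likely trigger.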

Make Timelines Part of the Process

If you treat timelines as an afterthought, you’ll keep repeating the same mistakes—just with better intentions.

But if you make timeline construction a default part of your incident response culture, the benefits compound:

- Faster incident resolution

- More actionable retros

- Better system visibility

- Shared mental models

- Trust across teams

Think of your timeline not as a log, but as a living system artifact: one that outlasts the incident and helps the next response go better.

Whether you're managing a 24/7 on-call rotation or building reliability programs at scale, timeline hygiene isn't optional. Neglect it and it becomes operational debt; invest in it and it becomes leverage. Choose wisely.